Individual Concerns with Recommendation Algorithms

12.2. Individual Concerns with Recommendation Algorithms

Let’s look at some of the concerns that an individual user might have with recommendation algorithms.

12.2.1. How recommendations can go well or poorly

Friends or Follows:

Recommendations for friends or people to follow can go well when the algorithm finds you people you want to connect with.

Recommendations can go poorly when, for example, the algorithm recommends an ex or an abuser because that person shares many connections with you.
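To see how this can happen, here is a minimal sketch of a recommender that only counts shared connections. This is an illustration under our own assumptions, not how any particular platform works: the `connections` data, the account names, and the `recommend_friends` function are all hypothetical.

```python
# Hypothetical friendship graph: each user maps to the set of people they are connected to.
connections = {
    "you": {"alex", "blake", "casey"},
    "alex": {"you", "blake", "ex_partner"},
    "blake": {"you", "alex", "casey", "ex_partner"},
    "casey": {"you", "blake", "ex_partner", "new_coworker"},
    "ex_partner": {"alex", "blake", "casey"},
    "new_coworker": {"casey"},
}


def recommend_friends(user, connections):
    """Rank people the user is not connected to by number of mutual connections."""
    already_connected = connections[user]
    mutual_counts = {}
    for friend in already_connected:
        for candidate in connections[friend]:
            # Skip the user themselves and anyone they are already connected to.
            if candidate == user or candidate in already_connected:
                continue
            mutual_counts[candidate] = mutual_counts.get(candidate, 0) + 1
    # Highest mutual count first; ties broken alphabetically for a stable order.
    return sorted(mutual_counts.items(), key=lambda item: (-item[1], item[0]))


recommendations = recommend_friends("you", connections)
print(recommendations)
```

In this made-up data, the account with the most mutual connections is the one the user deliberately disconnected from. A count of shared connections cannot tell the difference between "someone you haven't found yet" and "someone you are avoiding."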

Reminders:

Automated reminders can go well when, for example, a user enjoys the nostalgia of seeing something from their past.

Automated reminders can go poorly when they give users unwanted or painful reminders, such as reminders of miscarriages, funerals, or break-ups.

Ads:

Advertisements can go well for users when they surface products the users are genuinely interested in, and when ad revenue keeps the social media site free to use.

Advertisements can go poorly if they become part of discrimination (like only showing housing ads to certain demographics of people), or reveal private information (like revealing to a family that someone is pregnant).

Content (posts, photos, articles, etc.):

Content recommendations can go well when users find content they are interested in.

Content recommendations can go poorly when they send people down problematic chains of content, such as grouping videos of children in a way that is convenient for pedophiles, or Amazon recommending groups of materials for suicide.

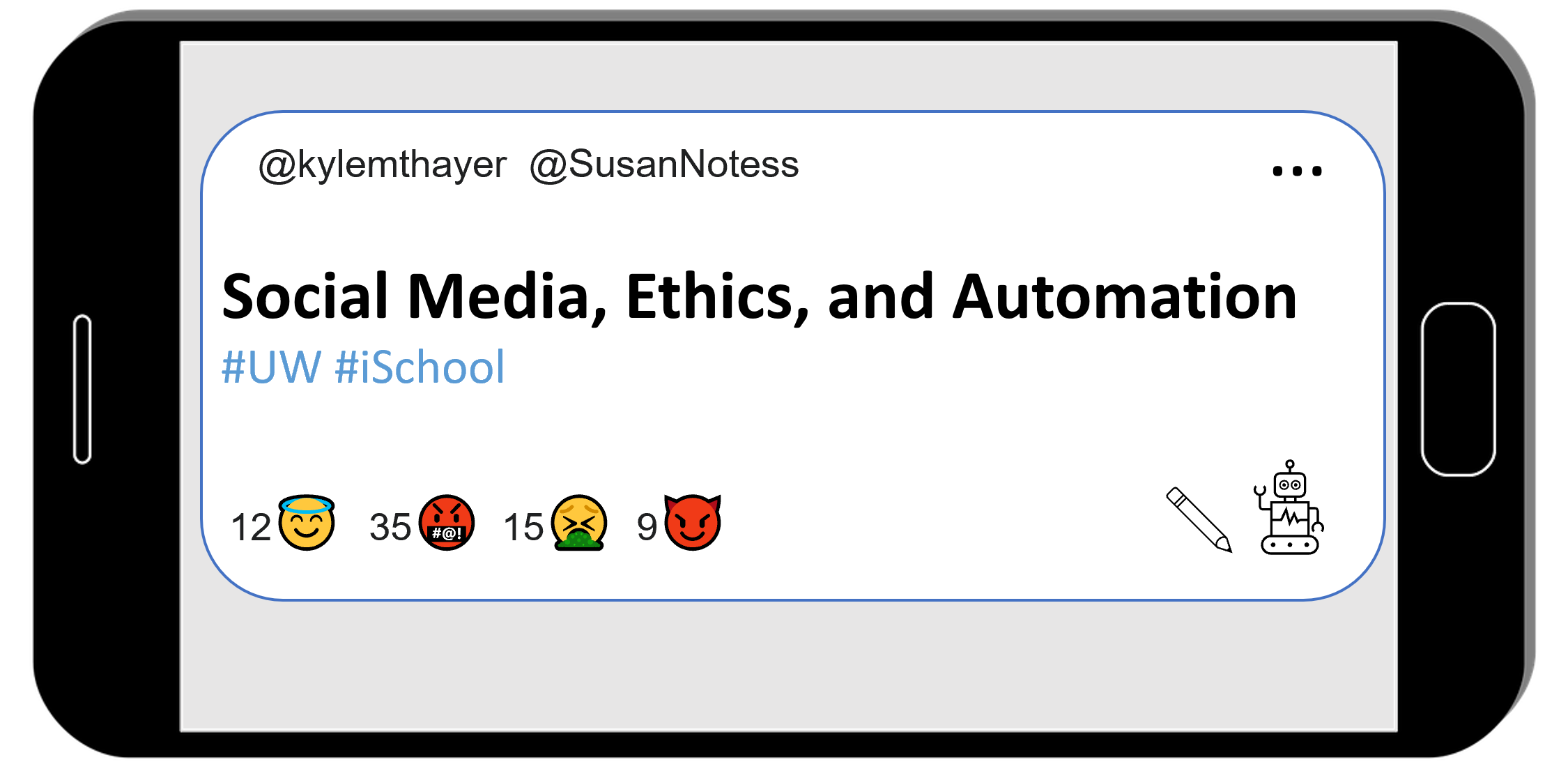

12.2.2. Gaming the recommendation algorithm

Knowing that there is a recommendation algorithm, users of the platform will try to do things to make the recommendation algorithm amplify their content. This is particularly important for people who make their money from social media content.

For example, in the case of the simple “show latest posts” algorithm, the best way to get your content seen is to constantly post and repost your content (though if you annoy users too much, it might backfire).
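As a rough illustration, a “show latest posts” feed can be sketched in a few lines. The post data, account names, and the `latest_posts_feed` function below are made up for this example; real platforms will differ.

```python
# Hypothetical posts as (timestamp, author, text); larger timestamps are more recent.
posts = [
    (1, "ana", "Photos from my hike"),
    (2, "spammer", "Buy my course!"),
    (3, "ben", "New recipe"),
    (4, "spammer", "Buy my course!"),
    (5, "spammer", "Buy my course!"),
    (6, "cam", "Concert tonight"),
    (7, "spammer", "Buy my course!"),
]


def latest_posts_feed(posts, feed_size):
    """Return the most recent posts, newest first, with no other ranking."""
    newest_first = sorted(posts, key=lambda post: post[0], reverse=True)
    return newest_first[:feed_size]


feed = latest_posts_feed(posts, feed_size=4)
spammer_slots = sum(1 for _, author, _ in feed if author == "spammer")
print(feed)
print(f"spammer holds {spammer_slots} of {len(feed)} slots")
```

Because recency is the only thing this feed considers, the account that reposts most often takes most of the slots, regardless of whether anyone wants to see the post.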

Other strategies include things like:

Clickbait: presenting a mystery you have to click to resolve (e.g., “you won’t believe what happened when this person tried to eat a stapler!”). Creators do this to boost clicks on their link, which they hope will boost them in the recommendation algorithm and get their ads more views.

Trolling: provoking reactions in the hope that the resulting engagement boosts their content.

Coordinated actions: having many accounts (possibly including bots) like a post, having many people use a hashtag, or having people trade positive reviews.
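Coordinated actions work against any algorithm that treats engagement counts as a signal of quality. Here is a minimal sketch, assuming a hypothetical ranking that simply orders posts by how many accounts liked them (the `rank_by_likes` function, the post names, and the account names are invented for illustration):

```python
def rank_by_likes(likes_by_post):
    """Order post names by number of distinct accounts that liked them, most first."""
    return sorted(likes_by_post, key=lambda post: (-len(likes_by_post[post]), post))


# Hypothetical data: each post maps to the set of accounts that liked it.
likes_by_post = {
    "local_news": {"u1", "u2", "u3", "u4"},
    "cat_video": {"u1", "u5", "u6"},
    "promo_post": {"u7"},
}
before = rank_by_likes(likes_by_post)

# A coordinated group (possibly bots) all like the same post.
coordinated_accounts = {f"bot{i}" for i in range(5)}
likes_by_post["promo_post"] |= coordinated_accounts
after = rank_by_likes(likes_by_post)

print(before)
print(after)
```

Five coordinated likes are enough to move the least popular post in this toy data to the top, since the ranking has no way to tell coordinated accounts from independent ones.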

YouTuber F.D. Signifier explores the YouTube recommendation algorithm and interviews various people about their experiences (particularly Black YouTubers like himself) in this video (it’s very long, so we’ll put some key quotes below).

A recommendation algorithm like YouTube’s tries to discover categories of content, so the algorithm can recommend more of the same type of content. F.D. Signifier explains:

[A social media platform] collects data on the viewing habits of each viewer and it uses that data to organize both viewers and channels into rabbit holes. All of this is automated by AI bots and machine learning. And all shade aside, it’s pretty impressive what they’re able to do. But these bots have no soul no conscience and most problematically no concept of social justice or responsibility.

[…]

Without this social responsibility effectively engineered, YouTube will and has funnel creators and viewers around in ways that reflect the biases and prejudices of the population it serves.

[…]

[As I follow YouTube recommendations] It’s far more likely that my biases will be confirmed and possibly even enhanced than they are to be challenged and re-evaluated. And it’s likely for a lot of consumers of YouTube that they will be segregated by political cultural and ethnic lines.

Since the recommendation algorithm bases its decisions on how users engage with content, the biases of users play into what gets boosted by the algorithm. For example, one common piece of advice on YouTube is for creators to put their faces on their preview thumbnail, but given that many users have a bias against Black people (whether intentional or not), this advice might not work:

In preparation for this video, and in just wanting to test out how to improve my channel’s reach, I took my Black face off of pretty much every thumbnail of any video that I’ve made up until this point. And the result was a clear uptick in views on each video that I did this for.
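One way to see why even a modest difference in how often viewers click can matter is a toy feedback loop. The sketch below is not YouTube’s algorithm; it assumes a hypothetical recommender that splits impressions between two otherwise identical videos in proportion to the clicks each has received so far. The click rates and the `simulate_exposure` function are made-up values for illustration.

```python
def simulate_exposure(click_rate_a, click_rate_b, rounds, impressions_per_round=1000):
    """Return video B's share of impressions in each round.

    Toy model: each round, impressions are split in proportion to the
    (expected) clicks each video has accumulated so far.
    """
    clicks_a, clicks_b = 1.0, 1.0  # both videos start from the same point
    share_b_history = []
    for _ in range(rounds):
        share_b = clicks_b / (clicks_a + clicks_b)
        share_b_history.append(share_b)
        # Expected clicks this round: impressions shown times the click rate.
        clicks_a += impressions_per_round * (1 - share_b) * click_rate_a
        clicks_b += impressions_per_round * share_b * click_rate_b
    return share_b_history


# Assumed click rates: viewers click video B slightly less often than video A.
share_b_history = simulate_exposure(click_rate_a=0.10, click_rate_b=0.08, rounds=20)
for round_number, share in enumerate(share_b_history, start=1):
    print(f"round {round_number}: video B gets {share:.1%} of impressions")
```

In this model video B starts with half of the impressions and its share shrinks every round, because fewer clicks lead to fewer impressions, which lead to fewer clicks. The algorithm never looks at who is in the thumbnail; it only passes the viewers’ bias along and compounds it.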

Additionally, because of how YouTube categorizes content, if someone tries to make content that doesn’t fit well in the existing categories, the recommendation algorithm might not boost it, or it might boost it in ill-fitting locations.

[Two problems happen with Black people trying to make educational content for Black audiences:]

Black viewers of educational channels are funneled into white content spaces and

Black content creators of educational content are funneled into really non-educational and in many cases toxic anti-Black content spaces.

12.2.3. Reflections

What responsibilities do you think social media platforms should have in what their recommendation algorithms recommend?

What strategies do you think might work to improve how social media platforms use recommendations?