Societal Concerns with Recommendation Algorithms

12.3. Societal Concerns with Recommendation Algorithms

Now let’s look at some larger societal concerns with the effects of recommendation algorithms.

12.3.1. Epistemic Bubbles / Echo Chambers

One concern with recommendation algorithms is that they can create echo chambers (or “epistemic bubbles”), where people get filtered into groups and the recommendation algorithm only gives them content that reinforces, and never challenges, their beliefs. These echo chambers allow people in the groups to freely have conversations among themselves without external challenge.

These echo chambers can include:

Hate groups, where people’s hate and fear of others gets reinforced and never challenged

Fan communities, where people’s appreciation of an artist, a work of art, or something else is assumed, and then reinforced and never challenged

Marginalized communities, where people can find safe spaces in which they aren’t constantly challenged or harassed

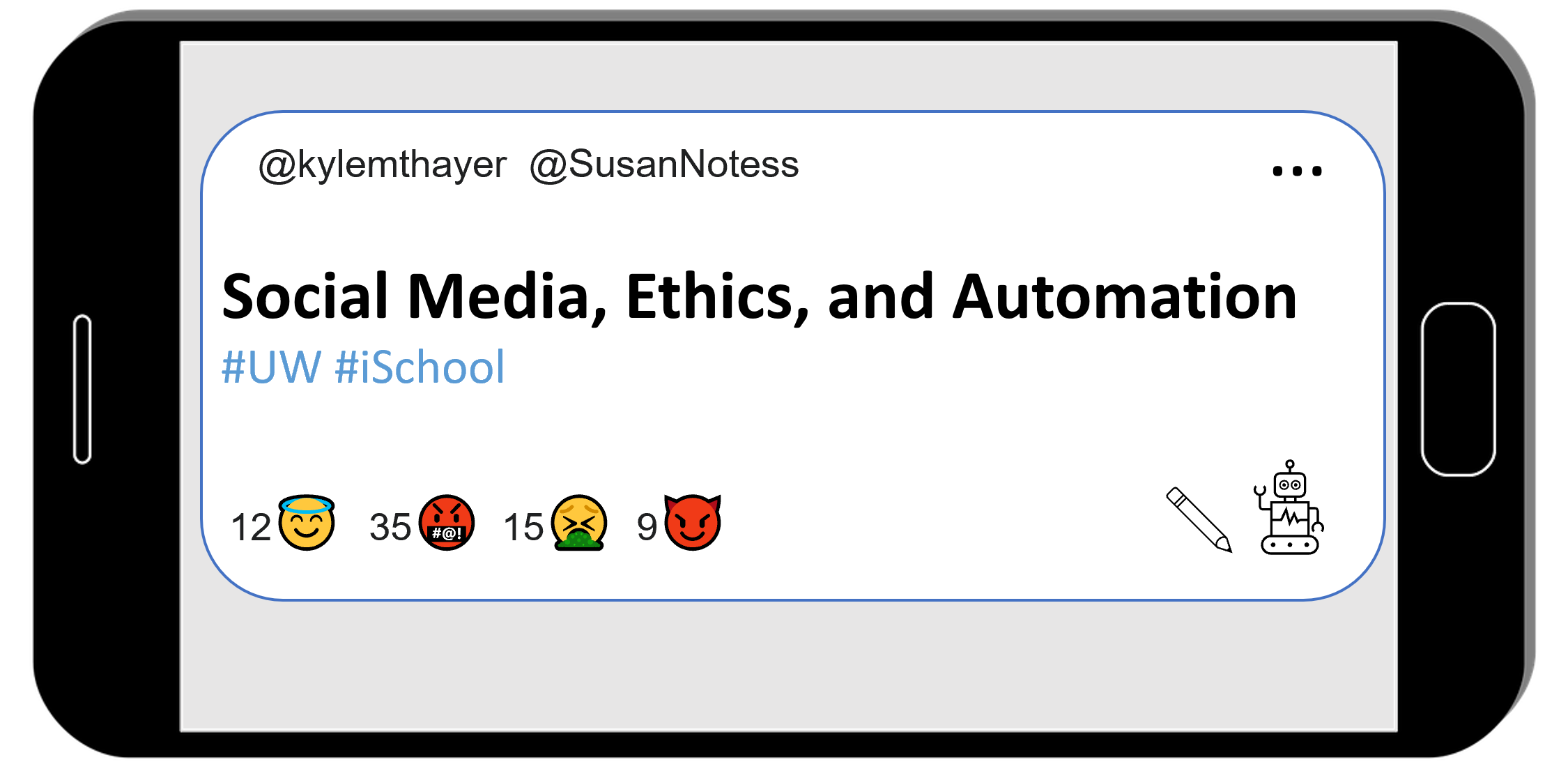

12.3.2. Amplifying Polarization and Negativity

There are concerns that echo chambers increase polarization, where groups lose common ground and the ability to communicate with each other. In some ways echo chambers are the opposite of context collapse: separate contexts are created and prevented from collapsing. Others have argued that people do interact across these echo chambers, but that the contentious nature of those interactions is what increases polarization.

Along those lines, if social media sites simply amplify content that gets strong reactions, they will often amplify the most negative and polarizing content. Recommendation algorithms can make this even worse. For example, at one point Facebook counted the default “like” reaction less than the “anger” reaction, which amplified negative content.
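To make the reaction-weighting idea concrete, here is a minimal sketch of how a ranking that counts some reactions more than others can push negative content to the top. The weights, the example posts, and the names `REACTION_WEIGHTS` and `engagement_score` are all made up for illustration; this is not Facebook's actual code or its actual numbers.

```python
# Hypothetical reaction weights, chosen only for illustration.
# Here an "anger" reaction counts five times as much as a "like".
REACTION_WEIGHTS = {"like": 1, "anger": 5}


def engagement_score(reactions):
    """Add up each reaction count multiplied by its weight."""
    return sum(
        REACTION_WEIGHTS.get(kind, 0) * count
        for kind, count in reactions.items()
    )


# Two made-up posts with made-up reaction counts.
posts = [
    {"title": "Cute puppy photo", "reactions": {"like": 100, "anger": 0}},
    {"title": "Outrage-bait post", "reactions": {"like": 10, "anger": 30}},
]

# Rank posts from highest to lowest weighted engagement.
ranked = sorted(
    posts,
    key=lambda post: engagement_score(post["reactions"]),
    reverse=True,
)

for post in ranked:
    print(post["title"], engagement_score(post["reactions"]))
```

In this sketch the outrage-bait post has far fewer total reactions (40 versus 100), but it still ranks first because each “anger” reaction counts for more than a “like.”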

On Twitter, one study found (full article on archive.org):

“Whereas Google gave higher rankings to more reliable sites, we found that Twitter boosted the least reliable sources, regardless of their politics.”

According to another study on Twitter:

“An analysis […] suggested that when users swarm tweets to denounce them with quote tweets and replies, they might be cueing Twitter’s algorithm to see them as particularly engaging, which in turn might be prompting Twitter to amplify those tweets. The upshot is that when people enthusiastically gather to denounce the latest Bad Tweet of the Day, they may actually be ensuring more people see it than had they never decided to pile on in the first place.

That possibility raises serious questions of what constitutes responsible civic behavior on Twitter and whether the platform is in yet another way incentivizing combative behavior.”

Though this is a big concern about Internet-based social media, traditional media sources also contribute to polarization. For example, see: Cable news has a much bigger effect on America’s polarization than social media, study finds

12.3.3. Radicalization

Building on the amplification of polarization and negativity, there are concerns (and real examples) of social media sites and their recommendation algorithms radicalizing people into conspiracy theories and into violence.

Rohingya Genocide in Myanmar

A genocide of the Rohingya people in Myanmar started in 2016, and in 2018 Facebook admitted it was used to ‘incite offline violence’ in Myanmar. In 2021, the Rohingya sued Facebook for £150bn over how Facebook amplified hate speech and didn’t take down inflammatory posts.

The Flat Earth Movement

The flat earth movement (an absurd conspiracy theory that the earth is actually flat, and not a globe) gained popularity in the 2010s. As YouTuber Dan Olson explains it in his (rather long) video In Search of a Flat Earth:

Modern Flat Earth [movement] was essentially created by content algorithms trying to maximize retention and engagement by serving users suggestions for things that are, effectively, incrementally more concentrated versions of the thing they were already looking at. Bizarre cranks peddling random theories are an aspect of civilization that has always been with us, so it was inevitable that they would end up on YouTube, but the algorithm made sure they found an audience. These systems were accidentally identifying people susceptible to conspiratorial and reactionary thinking and sending them increasingly deeper into Flat Earth evangelism.

Dan Olson then explained that by 2020, flat earth content was getting fewer views:

The bottom line is that Flat Earth has been slowly bleeding support for the last several years.

Because they’re all going to QAnon.

See also: YouTube aids flat earth conspiracy theorists, research suggests

12.3.4. Discussion Questions

What responsibilities do you think social media platforms should have with regard to larger social trends?

Consider impact vs. intent. For example, consequentialism only cares about the impact of an action. How do you feel about the importance of impact and intent in the design of recommendation algorithms?

What strategies do you think might work to improve how social media platforms use recommendations?